The Forensic Files API, Part 2

Video Diary

February 10, 2020

As outlined in my introductory post for this project, the first step to getting off the ground is to download all the Forensic Files episodes off of YouTube. Since naming things is hard, I opted to use the title of season 5, episode 18 for this particular project: Video Diary. Here's a quick plot summary of the episode, taken from the Forensic Files Wiki:

In 1998 when Lansing, Michigan convenience store employee Wanda Mason is found dead, having been shot at point blank range, investigators find that the entire murder has been caught on the store's videotape security camera.

But the image of the killer is so degraded that it seems impossible to positively identify him, until old-fashioned forensic science and space age technology come together to reveal the identity of the killer as Ronald Leon Allen.

Unless youtube-dl was designed by astronauts, I won't be using space age technology to accomplish this task. Without further ado, I'll give you the rundown on how I got everything downloaded.

Course of Action

I'm trying to adhere to Go conventions, so I created a new directory, /internal/videodiary, in my project. I'm using /internal because this functionality isn't considered part of the public API (it's just some pre-work to get the data loaded), and the Go toolchain only lets code rooted at the parent of an internal directory import the packages inside it.

I broke this project down into two parts:

- Get the episode URLs (somehow) and store them in a JSON file

- Loop through the episode URLs in the JSON file and download each episode

I figured that if I could get all the episode URLs, the downloading part should be a breeze. I just needed some way to quickly get all the episode URLs for each season.

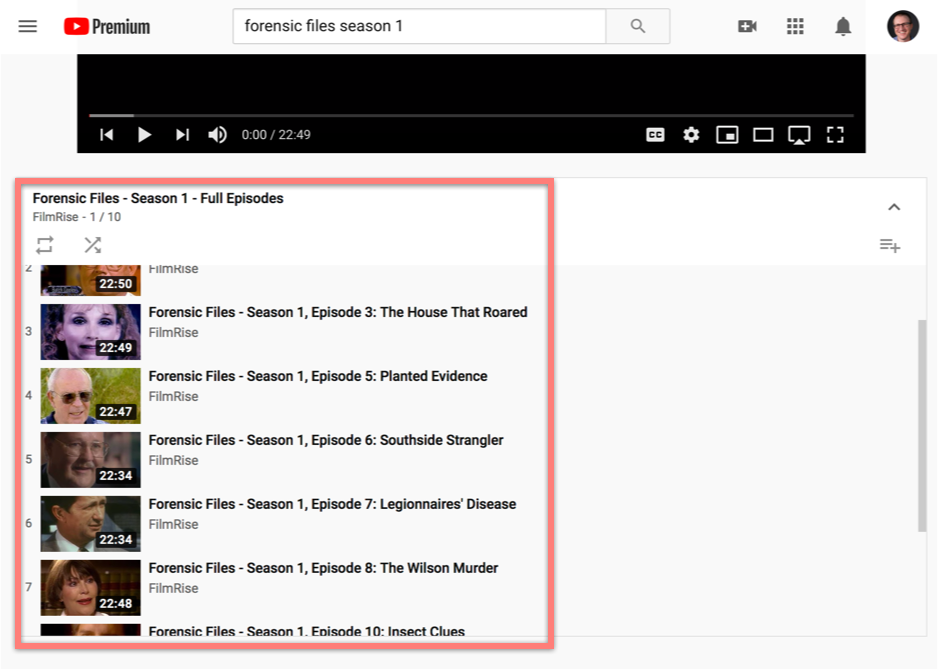

Getting and Storing the Episode URLs

I'm sure there's a bunch of snazzy ways to automate this process, but I opted for a manual approach. If you search for forensic files season 1 on YouTube and pick the first item in the result list (the FilmRise one), it'll start playing a playlist with all the season 1 episodes. There's a neat little scroll box with the episodes in the playlist. Here's a screenshot with the playlist highlighted with a tasteful salmon border:

Wouldn't it be neat to get the URL and title out of there without having to do a bunch of right-clicking and copy-pasting? It turns out that's pretty simple. I whipped up a little script and ran it in Chrome DevTools:

```javascript
// Grab every video link in the playlist panel on the right.
var endpointAnchors = document.querySelectorAll(
  "a.yt-simple-endpoint.style-scope.ytd-playlist-panel-video-renderer"
);

var videos = [];

endpointAnchors.forEach(endpointAnchor => {
  // The title span inside each link holds the full episode name.
  var videoTitleSpan = endpointAnchor.querySelector("#video-title");
  videos.push({ name: videoTitleSpan.title, url: endpointAnchor.href });
});

console.log(JSON.stringify(videos));
```

If you run that snippet, the console prints the playlist as a JSON array that you can copy and paste into a JSON file. I did this for each season and pasted each season's array into /assets/youtube-links.json under its season number.

I ended up with something that looks like this:

```json
{
  "01": [
    {
      "name": "Forensic Files - Series Premiere: The Disappearance of Helle Crafts",
      "url": "https://www.youtube.com/watch?v=wV8pNYh8diI&list=PLFtpZ659RpvGZvj342APoCEKmXNe24YEt&index=1"
    },
    {
      "name": "Forensic Files - Season 1, Episode 2: The Magic Bullet",
      "url": "https://www.youtube.com/watch?v=Bn8Oeae3j-c&list=PLFtpZ659RpvGZvj342APoCEKmXNe24YEt&index=2"
    }
  ]
}
```

I should note that I had to do a bit of cleanup. Some playlists included episodes from the previous season, but it didn't take long to fix that. I also separated the season, episode, and title with a | character and removed the "Forensic Files - " prefix, so the final product looked like this:

```json
{
  "01": [
    {
      "name": "Season 1 | Episode 1 | The Disappearance of Helle Crafts",
      "url": "https://www.youtube.com/watch?v=wV8pNYh8diI&list=PLFtpZ659RpvGZvj342APoCEKmXNe24YEt&index=1"
    },
    {
      "name": "Season 1 | Episode 2 | The Magic Bullet",
      "url": "https://www.youtube.com/watch?v=Bn8Oeae3j-c&list=PLFtpZ659RpvGZvj342APoCEKmXNe24YEt&index=2"
    }
  ]
}
```
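If you'd rather script the renaming than edit every title by hand, a regular expression can do most of it. This is a minimal sketch, not the approach used here, and it assumes every regular episode title follows the "Forensic Files - Season N, Episode M: Title" pattern seen above. The function name normalizeName is hypothetical. Titles that don't match, like the series premiere, are reported so they can still be fixed manually.

```go
package main

import (
	"fmt"
	"regexp"
)

// rawTitle matches YouTube titles such as
// "Forensic Files - Season 1, Episode 2: The Magic Bullet".
// The pattern is an assumption based on the sample titles.
var rawTitle = regexp.MustCompile(`^Forensic Files - Season (\d+), Episode (\d+): (.+)$`)

// normalizeName rewrites a matching title into the
// "Season N | Episode M | Title" form. It reports false for titles that
// don't follow the pattern, which still need a manual fix.
func normalizeName(title string) (string, bool) {
	m := rawTitle.FindStringSubmatch(title)
	if m == nil {
		return "", false
	}
	return fmt.Sprintf("Season %s | Episode %s | %s", m[1], m[2], m[3]), true
}

func main() {
	for _, t := range []string{
		"Forensic Files - Season 1, Episode 2: The Magic Bullet",
		"Forensic Files - Series Premiere: The Disappearance of Helle Crafts",
	} {
		name, ok := normalizeName(t)
		fmt.Printf("%q ok=%v\n", name, ok)
	}
}
```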

Some episodes were also missing from the playlists, so I had to search for them manually. When it was all said and done, I was able to find 390 out of the 398 total episodes. This is all the information we need to start downloading episodes. Let's move on to the actual downloading process.
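Tallying how many episodes made it into the file is easy to script, which helps when comparing against the expected total. This is a minimal sketch that assumes the season-keyed shape shown above; the jsonEpisode struct tags are inferred from the JSON keys, and countEpisodes is a hypothetical helper. In practice you would pass it the bytes from os.ReadFile on youtube-links.json rather than the inline sample.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// jsonEpisode mirrors one entry in youtube-links.json. This shape is an
// assumption inferred from the JSON in the post, not the repo's own type.
type jsonEpisode struct {
	Name string `json:"name"`
	URL  string `json:"url"`
}

// countEpisodes decodes the season-keyed JSON and returns the number of
// episodes per season plus the overall total.
func countEpisodes(data []byte) (map[string]int, int, error) {
	var links map[string][]jsonEpisode
	if err := json.Unmarshal(data, &links); err != nil {
		return nil, 0, err
	}
	perSeason := make(map[string]int, len(links))
	total := 0
	for season, eps := range links {
		perSeason[season] = len(eps)
		total += len(eps)
	}
	return perSeason, total, nil
}

func main() {
	data := []byte(`{
		"01": [{"name": "Season 1 | Episode 1 | The Disappearance of Helle Crafts", "url": "https://www.youtube.com/watch?v=wV8pNYh8diI"}],
		"02": []
	}`)
	perSeason, total, err := countEpisodes(data)
	if err != nil {
		panic(err)
	}
	// Go randomizes map iteration order, so sort the zero-padded keys.
	seasons := make([]string, 0, len(perSeason))
	for s := range perSeason {
		seasons = append(seasons, s)
	}
	sort.Strings(seasons)
	for _, s := range seasons {
		fmt.Printf("season %s: %d episodes\n", s, perSeason[s])
	}
	fmt.Println("total:", total)
}
```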

Downloading the Episodes

I'm using Go whenever I can because I'm trying to improve my skills with the language.

I wrote some boilerplate code to read the youtube-links.json file and build a slice of episode structs. Some of that code is shown below. I omitted the readYouTubeLinksJSON() function because it's just run-of-the-mill JSON parsing code.

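```go
type episode struct {
	Title         string
	SeasonNumber  int
	EpisodeNumber int
	VideoHash     string // the YouTube video ID (the "v" query parameter)
}

func parseEpisodesFromJSON() []*episode {
	log.Info("reading JSON file with YouTube URLs")

	jsonContents, err := readYouTubeLinksJSON()
	if err != nil {
		log.WithField("error", err).Fatal("Error reading YouTube URLs file")
	}

	var allEpisodes []*episode
	for season, jsonEpisodes := range jsonContents {
		// Season keys are zero-padded strings like "01".
		seasonNumber, err := strconv.Atoi(season)
		if err != nil {
			log.WithField("season", season).Fatal("Invalid season key")
		}

		for i, jsonEp := range jsonEpisodes {
			// Names look like "Season 1 | Episode 2 | The Magic Bullet".
			nameItems := strings.Split(jsonEp.Name, " | ")
			if len(nameItems) != 3 {
				log.WithField("name", jsonEp.Name).Warn("Skipping malformed episode name")
				continue
			}

			ep := &episode{
				Title:         nameItems[2],
				SeasonNumber:  seasonNumber,
				EpisodeNumber: i + 1,
				VideoHash:     extractHash(jsonEp),
			}
			allEpisodes = append(allEpisodes, ep)
		}
	}

	return allEpisodes
}

// extractHash returns the video ID from the "v" query parameter of the
// episode URL, or "" if the URL is missing or can't be parsed.
func extractHash(ep jsonEpisode) string {
	if ep.URL == "" {
		return ""
	}

	parsedURL, err := url.Parse(ep.URL)
	if err != nil {
		return ""
	}

	return parsedURL.Query().Get("v")
}
```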
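The code above takes the episode number from each entry's position in the JSON array (i + 1). Since some playlists mixed in other seasons' episodes and some entries were added by hand, reading the numbers out of the name itself is a sturdier option. Here is a minimal sketch of that idea; parseName and episodeInfo are hypothetical names, and the sketch assumes every name follows the "Season N | Episode M | Title" format. It returns an error instead of panicking on malformed names, and strings.SplitN keeps any stray " | " inside a title intact.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// episodeInfo is a hypothetical stand-in for the post's episode struct.
type episodeInfo struct {
	Title         string
	SeasonNumber  int
	EpisodeNumber int
}

// parseName splits "Season 1 | Episode 2 | The Magic Bullet" into its parts.
func parseName(name string) (episodeInfo, error) {
	parts := strings.SplitN(name, " | ", 3)
	if len(parts) != 3 {
		return episodeInfo{}, fmt.Errorf("expected 3 parts in %q, got %d", name, len(parts))
	}
	season, err := strconv.Atoi(strings.TrimPrefix(parts[0], "Season "))
	if err != nil {
		return episodeInfo{}, fmt.Errorf("bad season in %q: %w", name, err)
	}
	ep, err := strconv.Atoi(strings.TrimPrefix(parts[1], "Episode "))
	if err != nil {
		return episodeInfo{}, fmt.Errorf("bad episode in %q: %w", name, err)
	}
	return episodeInfo{Title: parts[2], SeasonNumber: season, EpisodeNumber: ep}, nil
}

func main() {
	info, err := parseName("Season 1 | Episode 2 | The Magic Bullet")
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", info)
}
```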

As for the downloading itself, I just loop through each episode in my slice and download it to the /assets/videos directory with youtube-dl:

```go
func DownloadEpisodes() {
	// Try calling `cmd.Run()` where the command is `youtube-dl --version`.
	// If it fails, bail the program (youtube-dl isn't installed).
	checkForYouTubeDL()

	allEpisodes := parseEpisodesFromJSON()
	for _, ep := range allEpisodes {
		// Skip episodes without a video ID (no URL was found for them).
		if ep.VideoHash != "" {
			downloadEpisode(ep)
		}
	}
}

func downloadEpisode(ep *episode) {
	// Find the full path to the output file
	// e.g. `.../assets/videos/season-1/01-11-outbreak.mp4`:
	outPath := outputFilePath(ep)

	// crimeseen is a package within /internal that has commonly used
	// paths and utility functions. Skipping existing files makes the
	// command safe to re-run after an interruption.
	if crimeseen.FileExists(outPath) {
		return
	}

	log.WithFields(logrus.Fields{
		"season":  ep.SeasonNumber,
		"episode": ep.EpisodeNumber,
		"title":   ep.Title,
		"path":    outPath,
	}).Info("Downloading video from YouTube")

	// No additional flags are needed for youtube-dl.
	// Almost all of the files are in `.mp4` format.
	// The `--` stops youtube-dl from treating a video ID that starts
	// with "-" as a command-line option.
	cmd := exec.Command("youtube-dl", "-o", outPath, "--", ep.VideoHash)
	cmd.Stdout = os.Stdout
	cmd.Stderr = os.Stderr

	if err := cmd.Run(); err != nil {
		log.WithFields(logrus.Fields{
			"error": err,
			"title": ep.Title,
			"path":  outPath,
		}).Error("Error downloading video")
	} else {
		log.Println("Download successful")
	}

	// We're hedging our bets here to make sure we don't exceed
	// some kind of rate limit, so we wait even after a failure:
	log.Println("Waiting 1 minute")
	time.Sleep(time.Minute)
}
```

For better context, check out the full file in the GitHub repo.
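The checkForYouTubeDL() function is only described by its comment: run youtube-dl --version and bail if it fails. A minimal sketch of that check, generalized to any tool name, might look like this; checkTool is a hypothetical name. Running the binary, rather than only calling exec.LookPath, also confirms that it actually executes.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
)

// checkTool runs `<name> --version` and returns an error if the binary is
// missing from PATH or exits with a non-zero status.
func checkTool(name string) error {
	if err := exec.Command(name, "--version").Run(); err != nil {
		return fmt.Errorf("%s is not available: %w", name, err)
	}
	return nil
}

func main() {
	if err := checkTool("youtube-dl"); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println("youtube-dl found")
}
```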

To call the DownloadEpisodes function, I used kingpin to create a simple command-line application named alibi. All internal functionality is called from the /cmd/alibi/alibi.go file.

Once everything was ready, I ran this command at around 9pm and headed off to bed:

```shell
go run ./cmd/alibi/alibi.go videodiary
```

When I woke up the next morning, I found that about 290 episodes had been downloaded successfully. For some reason my internet connection dropped around 4am. Since I added a check to skip the download if the video file already existed, I just re-ran the command and it finished the remaining downloads in a couple of hours.

With all of my videos ready to go, I need to extract the audio from each one for speech-to-text processing. I'll cover that in the next post. Stay tuned!